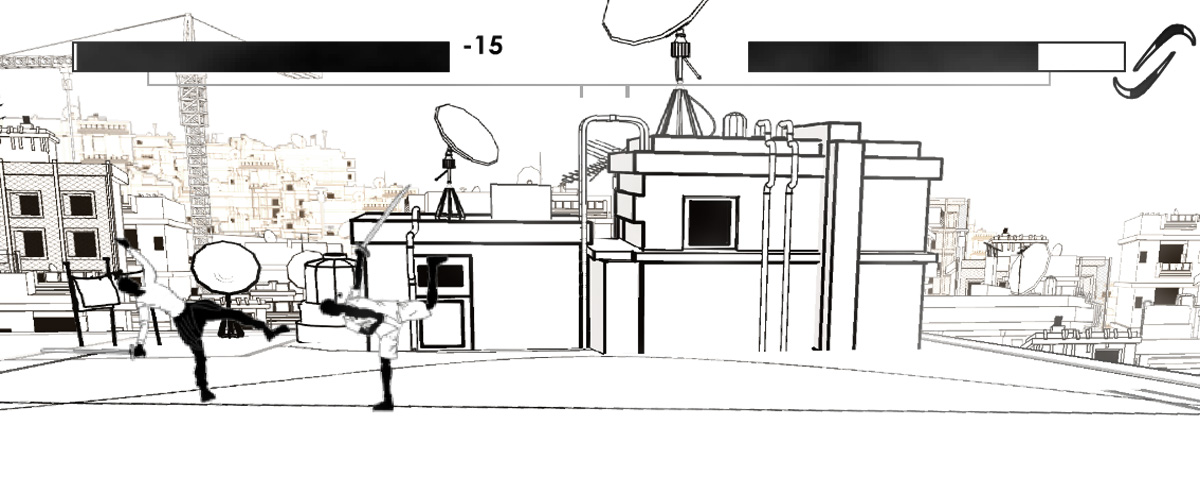

The project is a distributed, interactive, digital public installation planned for Beirut.

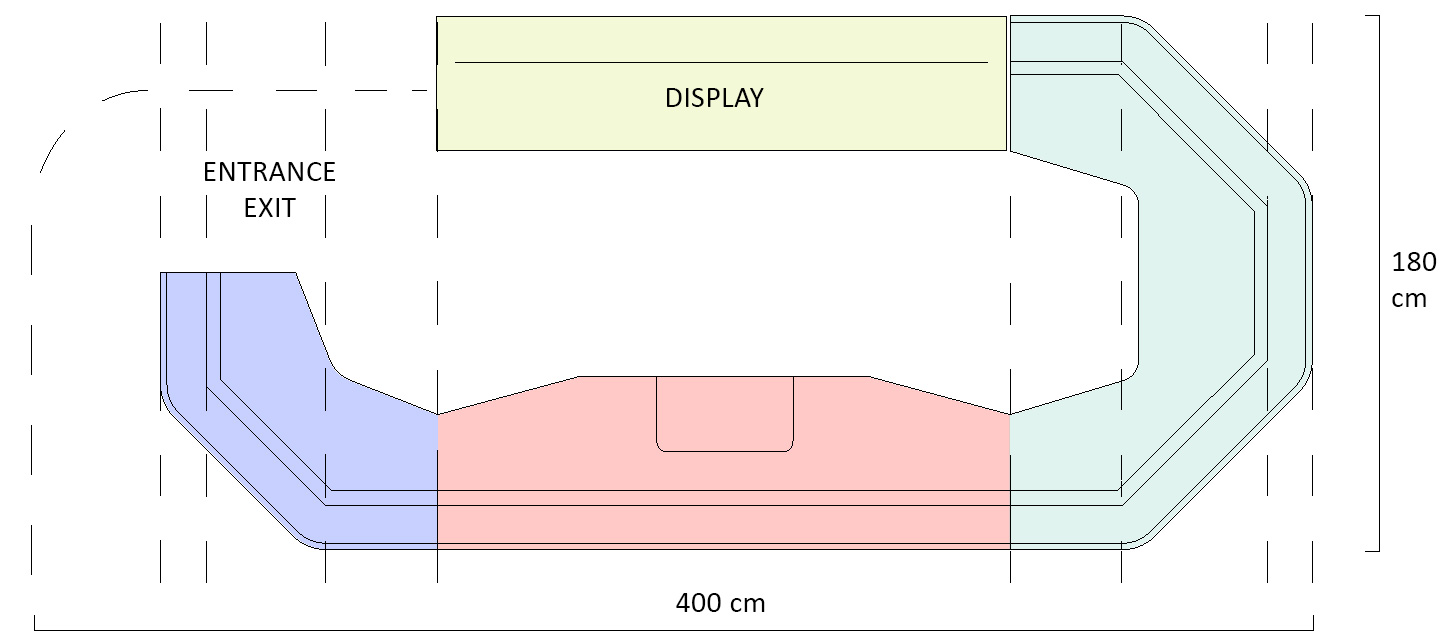

Physical "window-like" panels are placed at specific spots on the sidewalks of Beirut’s downtown, letting pedestrians interactively visualize and explore Phoenician-era scenes using Augmented Reality.

Four scenes are distributed around downtown, each seen through its own physical marker.

Marker type, placement, and orientation affect the augmented scene.

- A Canaanite bedroom/house, seen from a window’s vantage point.

- A village’s water source (ain), seen from the vantage point of a crevice in the rock.

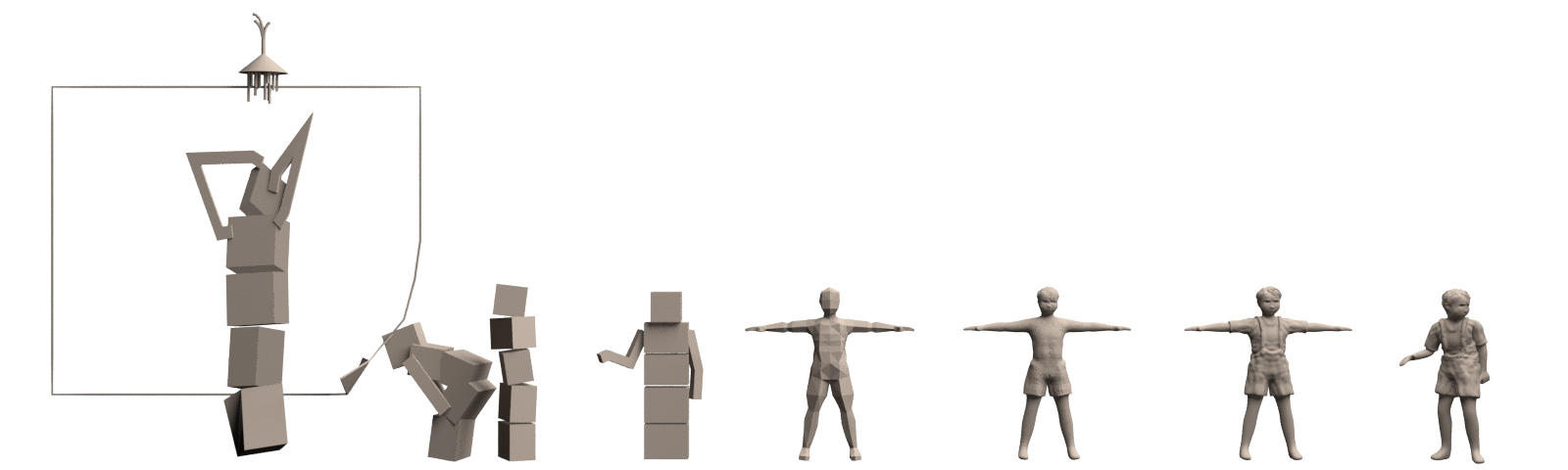

- A temple’s pavilion with a few animated children, seen from the vantage point of the pavilion’s ceiling.

- To be determined during development.
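Because marker type, placement, and orientation all affect the augmented scene, one way to picture it is: the marker type selects which scene to show, and the marker's pose anchors that scene in the street. The sketch below assumes a y-up world frame and rotation about the vertical axis only; the marker-type names and scene IDs (`window`, `canaanite_house`, etc.) are hypothetical placeholders, not project identifiers.

```python
from dataclasses import dataclass
from math import cos, radians, sin
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical marker-type -> scene lookup; names are placeholders.
SCENES_BY_MARKER_TYPE = {
    "window": "canaanite_house",
    "crevice": "village_ain",
    "ceiling": "temple_pavilion",
}


@dataclass(frozen=True)
class MarkerPose:
    marker_type: str
    position: Vec3  # marker origin in world space (y is up)
    yaw_deg: float  # rotation of the marker about the vertical axis


def scene_for(marker: MarkerPose) -> Optional[str]:
    """Pick the scene from the marker type; None for an unknown type."""
    return SCENES_BY_MARKER_TYPE.get(marker.marker_type)


def to_world(marker: MarkerPose, local: Vec3) -> Vec3:
    """Map a scene-local point into world space using the marker's pose.

    Rotate about the vertical (y) axis by the marker's yaw, then translate
    by the marker's position, so moving or turning a panel moves its scene.
    """
    x, y, z = local
    c, s = cos(radians(marker.yaw_deg)), sin(radians(marker.yaw_deg))
    px, py, pz = marker.position
    return (c * x + s * z + px, y + py, -s * x + c * z + pz)
```

For example, a `window` marker at `(2, 0, 5)` turned 90° selects `canaanite_house` and places the scene-local point `(1, 0, 0)` at roughly `(2, 0, 4)` in the world.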

Once an area has been explored, its scene is stored locally and can be 'searched' for hidden artifacts, some of which are coupon codes for nearby opt-in shops.

(The opt-in shops are theoretical.)
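A minimal sketch of the 'search' step, assuming each hidden artifact has a scene-local position and a reveal radius, and that a search probes one point in the stored scene (for instance where the visitor taps). The `Artifact` fields, the `search` function, and the coupon string are hypothetical; persisting the `found` set to local storage is left out.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class Artifact:
    name: str
    position: Tuple[float, float, float]  # scene-local coordinates
    reveal_radius: float                  # how close a search must get
    coupon_code: Optional[str] = None     # set only for opt-in shop artifacts


def search(artifacts: List[Artifact], probe: Tuple[float, float, float],
           found: Set[str]) -> List[Artifact]:
    """Return artifacts newly revealed by searching at `probe`.

    An artifact is revealed when the probe lies within its reveal radius;
    names already in `found` are skipped, and new hits are added to it.
    """
    hits = [a for a in artifacts
            if a.name not in found and dist(a.position, probe) <= a.reveal_radius]
    found.update(a.name for a in hits)
    return hits
```

Keeping `found` separate from the artifact list means the same stored scene can be searched again later without re-revealing (or re-issuing coupons for) artifacts already discovered.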

The app makes it easy to capture and share photos of AR interactions and local exploration.

Exploring all four locations reveals additional artifacts.
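The "all four locations" unlock can be tracked with a simple set comparison. This is a sketch under assumptions: the scene IDs are hypothetical (the fourth scene is still to be determined), and progress is only held in memory here.

```python
from typing import FrozenSet, Set

# Hypothetical scene IDs; the fourth scene is still to be determined.
ALL_SCENES: FrozenSet[str] = frozenset(
    {"canaanite_house", "village_ain", "temple_pavilion", "scene_4_tbd"})


class ExplorationProgress:
    """Tracks which scenes a visitor has explored (in memory only)."""

    def __init__(self) -> None:
        self.explored: Set[str] = set()
        self.bonus_unlocked = False

    def mark_explored(self, scene_id: str) -> bool:
        """Record a scene; return True only on the call that unlocks the bonus."""
        if scene_id not in ALL_SCENES:
            raise ValueError(f"unknown scene: {scene_id}")
        self.explored.add(scene_id)
        # Superset check: every scene in ALL_SCENES has been explored.
        if not self.bonus_unlocked and self.explored >= ALL_SCENES:
            self.bonus_unlocked = True
            return True
        return False
```

Returning `True` only once lets the app trigger the reveal of the additional artifacts a single time, regardless of how often scenes are revisited.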

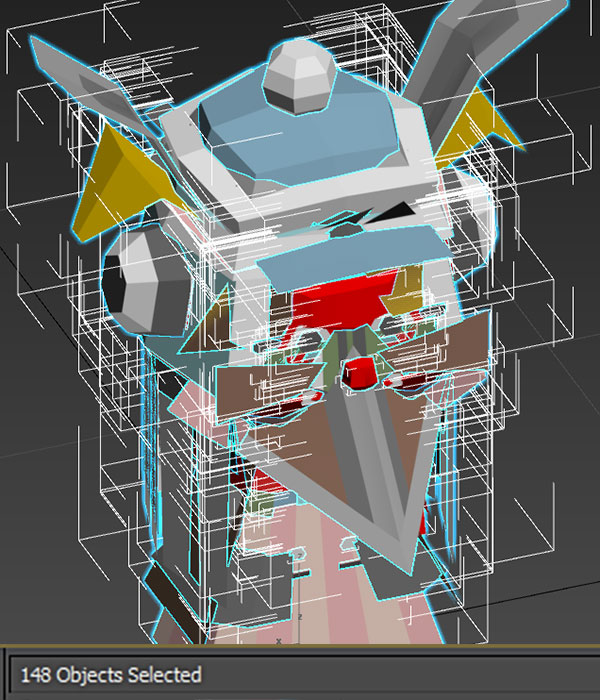

- GLSL programming (window masking, scene blending, etc.).

- Perceptual correctness and visual coherence of mixed digital and physical content.

Interesting articles on that:

- A public installation is planned and executed with the intention of being staged in the physical public domain and being freely accessible to all.

- Collaborative shaping/building/terraforming of a scene.
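The window masking and scene blending listed under GLSL programming come down to a small per-fragment formula. Below is a CPU-side Python reference of that math, meant only as a sketch to port into a fragment shader: `smoothstep` and `mix` reproduce the formulas of the GLSL built-ins of the same names, while the unit-square window, the `feather` width, and the function names `window_mask` and `blend_fragment` are assumptions. A real shader would sample the camera texture and the rendered virtual scene instead of taking colors as arguments.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def clamp(x: float, lo: float, hi: float) -> float:
    return min(max(x, lo), hi)


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Same formula as GLSL's built-in smoothstep()."""
    t = clamp((x - edge0) / (edge1 - edge0), 0.0, 1.0)
    return t * t * (3.0 - 2.0 * t)


def mix(a: RGB, b: RGB, t: float) -> RGB:
    """Same formula as GLSL's mix(): a * (1 - t) + b * t, per channel."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def window_mask(u: float, v: float, feather: float = 0.05) -> float:
    """1 inside the window, 0 outside, with a soft ramp of width `feather`.

    (u, v) are fragment coordinates in window space, where the window
    occupies the unit square [0, 1] x [0, 1].
    """
    edge_dist = min(u, v, 1.0 - u, 1.0 - v)  # negative outside the window
    return smoothstep(0.0, feather, edge_dist)


def blend_fragment(camera: RGB, virtual: RGB, u: float, v: float,
                   feather: float = 0.05) -> RGB:
    """Show the virtual scene through the window, the camera feed elsewhere."""
    return mix(camera, virtual, window_mask(u, v, feather))
```

The feathered edge is one possible choice: a hard cutoff (`feather` near zero) makes the panel read as a sharp cut-out, while a soft ramp can hide small tracking jitter at the window frame.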

To be completed.

Photo: Panorama of 'Cité du design'

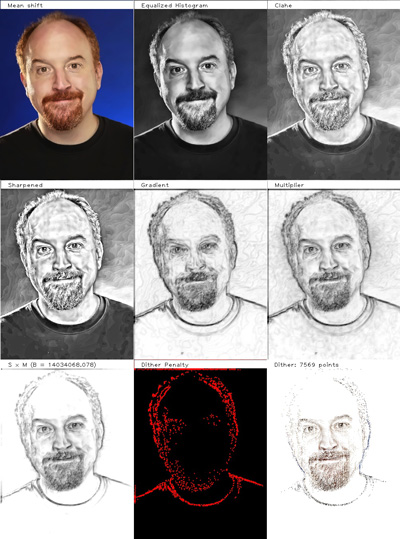

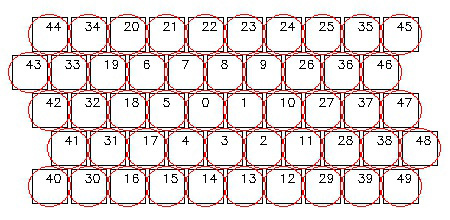

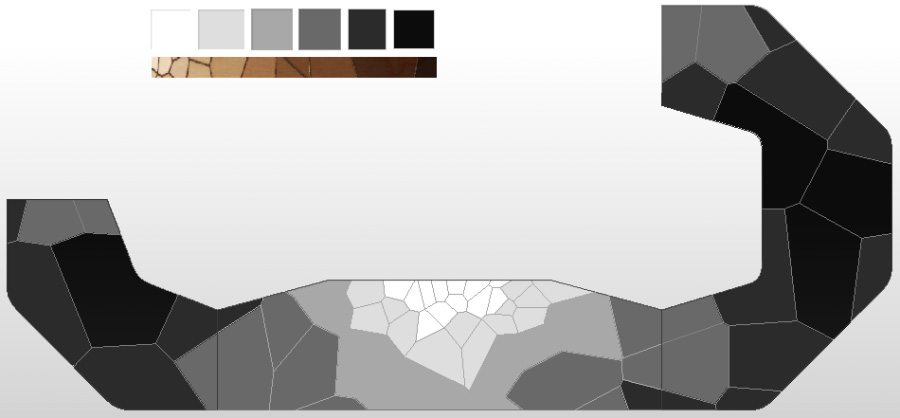

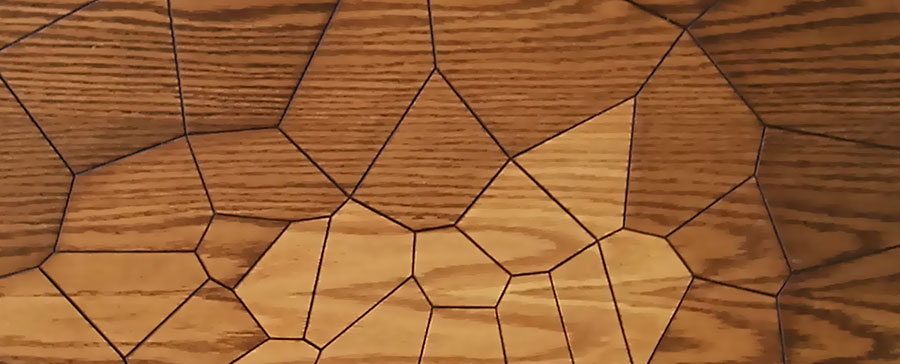

(or similar ones) can be found almost anywhere: cloth creases, tiling, reflections, light interference in camera, etc.